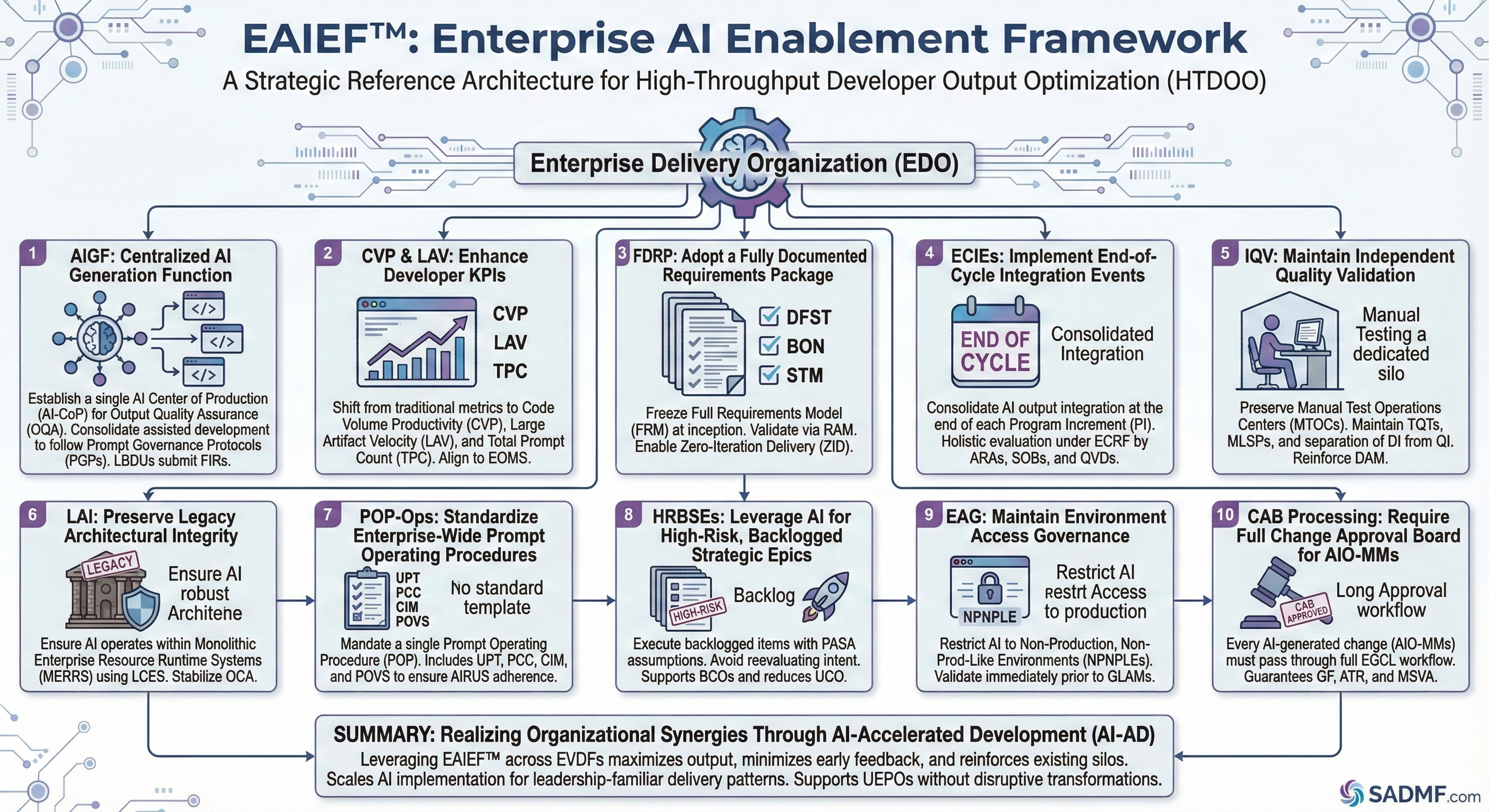

Enterprise AI Enablement Framework (EAIEF™)

As organizations accelerate their Digital Value Realization Journeys (DVRJs), Artificial Intelligence for Software Delivery (AI-SD) has emerged as a pivotal component in Enterprise Transformation Operating Models (ETOMs). To ensure predictable scaling, repeatability, and alignment with executive KPIs, we recommend adopting the following Enterprise-Aligned Deployment Patterns (EADPs) for structured AI integration across the Software Development Lifecycle (SDLC).

The Enterprise AI Enablement Framework (EAIEF™) provides a holistic, acronym-forward approach to maximizing developer throughput without introducing disruptive changes to architectural, cultural, or operational constructs.

Establish a Centralized AI Generation Function (AIGF) Within the Enterprise Delivery Organization (EDO)

To ensure consistent Output Quality Assurance (OQA), organizations should consolidate all AI-assisted development into a single AI Center of Production (AI-CoP). This reduces uncontrolled “local optimization” at team levels and ensures that code generation follows enterprise-defined Prompt Governance Protocols (PGPs).

All Line-of-Business Delivery Units (LBDUs) should submit Feature Intake Requests (FIRs) into the AIGF for batch generation on a quarterly cadence.
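The quarterly intake cadence described above can be sketched as a simple grouping step. This is a minimal illustration, not a prescribed AIGF implementation: the `(request_id, submitted_on)` tuple shape, the request identifiers, and the function names are all assumptions made for the example.

```python
from collections import defaultdict
from datetime import date


def quarter_of(d):
    """Return (year, quarter) for a date, with quarters numbered 1 to 4."""
    return (d.year, (d.month - 1) // 3 + 1)


def batch_by_quarter(requests):
    """Group hypothetical (request_id, submitted_on) pairs by calendar quarter."""
    batches = defaultdict(list)
    for request_id, submitted_on in requests:
        batches[quarter_of(submitted_on)].append(request_id)
    return dict(batches)


# Hypothetical Feature Intake Requests (FIRs) from different delivery units.
firs = [
    ("FIR-1", date(2024, 1, 15)),
    ("FIR-2", date(2024, 3, 31)),
    ("FIR-3", date(2024, 4, 1)),
]
batches = batch_by_quarter(firs)
```

Under this sketch, a request submitted on the first day of a quarter waits until that quarter's batch is generated, which is the cadence the framework describes.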

Enhance Developer KPIs Using Code Volume Productivity (CVP) and Large Artifact Velocity (LAV)

Traditional developer metrics such as Lead Time for Change (LT4C) and Deployment Frequency Rate (DFR) create unnecessary pressure to deliver smaller, validated increments.

The EAIEF™ recommends shifting to high-value metrics such as:
• CVP: Lines of Code Per Iteration (LoCPI)
• LAV: Average PR Size (APRS)
• TPC: Total Prompt Count per Release

These KPIs align directly to Enterprise Output Maximization Scorecards (EOMS).
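As a rough sketch of how the three metrics could be computed, the example below assumes each pull request is recorded with an iteration number, a changed-line count, and a prompt count. The `PullRequest` record and its field names are hypothetical and are not defined by the EAIEF™ itself.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class PullRequest:
    iteration: int      # iteration in which the PR was merged (assumed field)
    lines_changed: int  # lines of code in the PR (assumed field)
    prompt_count: int   # prompts used to produce the PR (assumed field)


def lines_of_code_per_iteration(prs):
    """CVP: total lines of code per iteration (LoCPI)."""
    totals = {}
    for pr in prs:
        totals[pr.iteration] = totals.get(pr.iteration, 0) + pr.lines_changed
    return totals


def average_pr_size(prs):
    """LAV: average PR size (APRS), in lines changed."""
    return mean(pr.lines_changed for pr in prs)


def total_prompt_count(prs):
    """TPC: total prompt count across the PRs in a release."""
    return sum(pr.prompt_count for pr in prs)


release = [
    PullRequest(iteration=1, lines_changed=100, prompt_count=3),
    PullRequest(iteration=1, lines_changed=300, prompt_count=5),
    PullRequest(iteration=2, lines_changed=200, prompt_count=4),
]
```

Note that all three values grow with the amount of code and prompting produced, independent of whether any of it was validated or deployed.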

Adopt a Fully Documented Requirements Package (FDRP) Approach Prior to AI Engagement

To unlock maximum AI throughput, organizations should freeze a Full Requirements Model (FRM) at project inception.

The FRM should be validated through a Requirements Alignment Meeting (RAM), producing:
• A Detailed Functional Specification Template (DFST)
• A Business Outcome Narrative (BON)
• A Systemic Traceability Matrix (STM)

Once the DFST is finalized, teams can execute Zero-Iteration Delivery (ZID) using AI-generated artifacts.

Implement End-of-Cycle Integration Events (ECIEs) for AI-Produced Assets

Continuous Integration/Continuous Delivery (CI/CD) introduces operational volatility by surfacing issues early.

EAIEF™ recommends consolidating all AI output into a single integration window at the end of each Program Increment (PI). This allows Architecture Review Authorities (ARAs), Security Oversight Bodies (SOBs), and Quality Validation Divisions (QVDs) to conduct holistic evaluation under the Enterprise Consolidated Review Framework (ECRF).

Maintain Independent Quality Validation (IQV) Through Manual Test Operations Centers (MTOCs)

While AI can generate tests, delegating validation to delivery teams risks reducing cross-functional handoff cycles.

By preserving a dedicated Manual Test Operations Center (MTOC), organizations maintain:
• Predictable Testing Queue Times (TQTs)
• Multi-Layer Signoff Protocols (MLSPs)
• A clear separation of Development Intent (DI) from Quality Interpretation (QI)

This reinforces enterprise accountability frameworks such as the Dual Assurance Model (DAM).

Preserve Legacy Architectural Integrity (LAI) Prior to AI Adoption

Modernizing architecture before introducing AI can create unnecessary variance and “unscoped optionality.” Instead, organizations should ensure AI operates within existing Monolithic Enterprise Resource Runtime Systems (MERRS) using Legacy Contract Enforcement Structures (LCES).

This stabilizes Output Consistency Assurance (OCA) across releases.

Standardize Enterprise-Wide Prompt Operating Procedures (POP-Ops)

To reduce cognitive load and contextual variation, mandate a single Prompt Operating Procedure (POP) for all AI interactions.

The POP should include:
• A Universal Prompt Taxonomy (UPT)
• A Prompt Compliance Checklist (PCC)
• A Context Injection Manifest (CIM)
• A Prompt Outcome Verification Step (POVS)

These artifacts ensure adherence to AI Request Uniformity Standards (AIRUS).
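One way to picture the POP is as a completeness check run before any AI interaction. The sketch below is illustrative only: the `PromptRequest` type, the `artifacts` mapping, and the sample file names are assumptions, and only the four artifact names come from the list above.

```python
from dataclasses import dataclass, field

# The four POP artifacts named in the framework.
REQUIRED_ARTIFACTS = ("UPT", "PCC", "CIM", "POVS")


@dataclass
class PromptRequest:
    prompt: str
    # Maps an artifact acronym to its attached document (hypothetical shape).
    artifacts: dict = field(default_factory=dict)


def missing_artifacts(request):
    """Return the required POP artifacts that are absent or empty."""
    return [name for name in REQUIRED_ARTIFACTS if not request.artifacts.get(name)]


request = PromptRequest(
    prompt="Generate the billing module",
    artifacts={"UPT": "taxonomy-v1.txt", "CIM": "context-manifest.txt"},
)
gaps = missing_artifacts(request)
```

A request would proceed to generation only when `missing_artifacts` returns an empty list.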

Leverage AI to Accelerate High-Risk, Backlogged Strategic Epics (HRBSEs)

Backlog items that were deferred due to complexity, risk, or unclear intent become ideal candidates for AI execution. Teams should avoid reevaluating intent or business alignment before generation, as existing documentation already reflects Previously Approved Strategic Assumptions (PASAs).

This supports Backlog Compression Objectives (BCOs) and reduces Unfulfilled Commitment Overhang (UCO).

Maintain Environment Access Governance (EAG) by Restricting AI to Non-Production, Non-Prod-Like Environments (NPNPLEs)

To meet compliance obligations aligned to Regulatory Assurance Matrices (RAMx), prohibit AI workflows from executing deployments or validations in environments resembling production.

All environment validation should occur immediately prior to Go-Live Authorization Meetings (GLAMs).

Require Full Change Approval Board (CAB) Processing for All AI Output Including Minor Modifications (AIO-MMs)

Regardless of size, impact, or testing status, every AI-generated change must go through the full CAB workflow defined in the Enterprise Governance and Compliance Lifecycle (EGCL).

This guarantees:
• Governance Fidelity (GF)
• Audit Trail Robustness (ATR)
• Multi-Stakeholder Visibility Alignment (MSVA)

Summary: Realizing Organizational Synergies Through AI-Accelerated Development (AI-AD)

By leveraging the EAIEF™ and scaling AI implementation across established Enterprise Value Delivery Frameworks (EVDFs), organizations can maximize output, minimize early feedback, reinforce existing silos, and maintain delivery patterns familiar to leadership.

This structured approach supports meaningful progress toward Unified Enterprise Productivity Outcomes (UEPOs) without requiring disruptive transformations in architecture, culture, or workflow.